Aligning VR with reality

2022-09-07

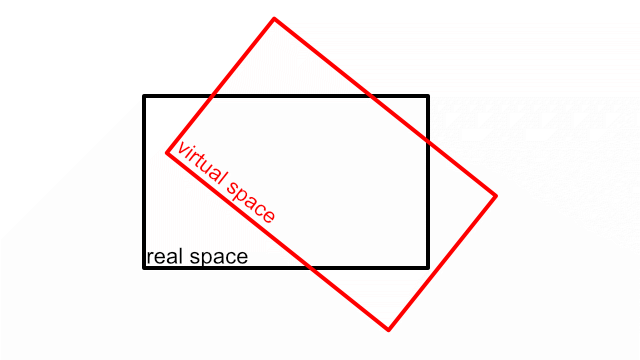

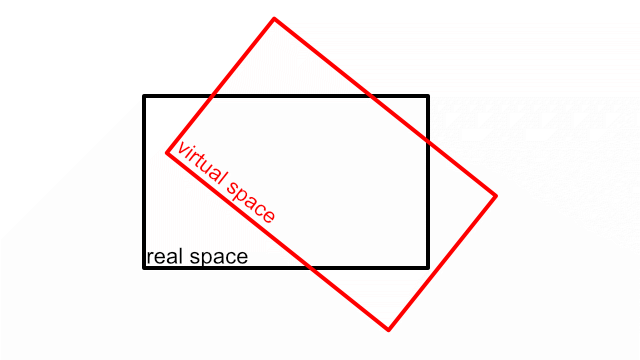

Unless you’re a ghost, you’re probably going to take issue with walking into walls, so we need some way to align the virtual space with reality.

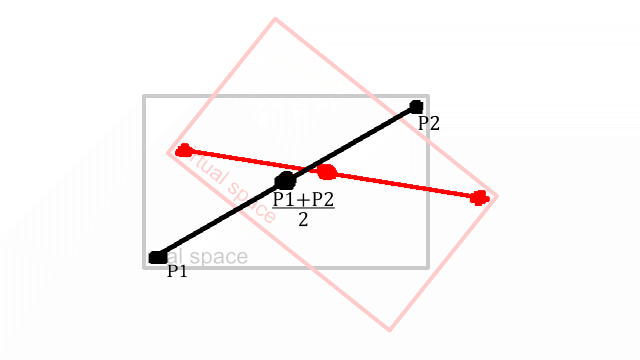

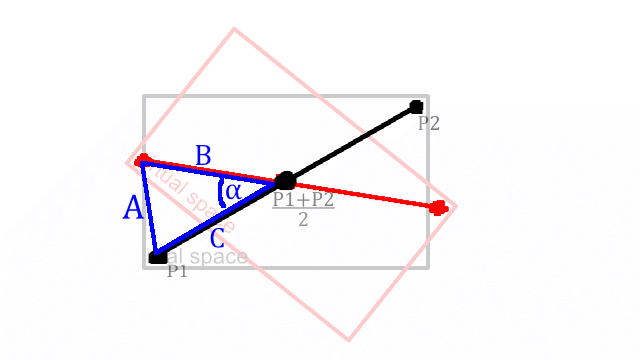

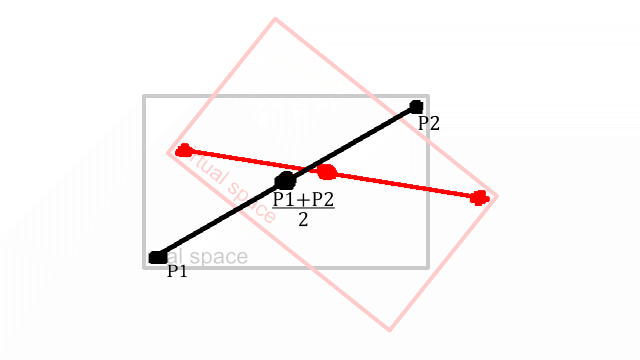

If we identify two opposite corners by touching them with the controllers we can average them to get the center point of the room. Since we already have full control over the virtual version of the room we can mark the same points out in the game level by placing some actors.

With this we can align the location of the rooms by adding the difference between the two midpoints to the location of the player pawn. You can think of reality as being “attached” to the player, since when the VR player is moved, their entire playspace moves with them.
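As a rough sketch of the location step, here is the midpoint arithmetic in plain Python rather than Blueprints. The function names are hypothetical, the points are simplified to 2D `(x, y)` tuples on the floor plane, and it assumes the controller points have already been read in the same world space as the actors placed in the level.

```python
def midpoint(a, b):
    """Average two (x, y) points to get the point halfway between them."""
    return ((a[0] + b[0]) / 2, (a[1] + b[1]) / 2)


def location_offset(real_a, real_b, virtual_a, virtual_b):
    """Difference between the virtual room's midpoint and the real room's midpoint.

    real_a / real_b: the two corners marked with the controllers (assumed world space).
    virtual_a / virtual_b: the matching marker actors placed in the level.
    """
    real_mid = midpoint(real_a, real_b)
    virtual_mid = midpoint(virtual_a, virtual_b)
    return (virtual_mid[0] - real_mid[0], virtual_mid[1] - real_mid[1])


def apply_offset(pawn_location, offset):
    """Move the pawn (and the playspace attached to it) by the offset."""
    return (pawn_location[0] + offset[0], pawn_location[1] + offset[1])


# Made-up numbers: real corners at (0, 0) and (4, 2), virtual corners at (10, 10) and (14, 12).
offset = location_offset((0, 0), (4, 2), (10, 10), (14, 12))
new_pawn = apply_offset((1, 1), offset)
print(offset)    # (10.0, 10.0)
print(new_pawn)  # (11.0, 11.0)
```

Because the playspace moves with the pawn, shifting the pawn by this offset shifts the real midpoint onto the virtual one.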

Technically we could have already aligned the location with just a single point, but using the average of two also averages out any inaccuracies or weirdness from using the controllers to mark points. We need two points to figure out rotation anyway, so we may as well get that extra bit of accuracy. Speaking of rotation, that’s what we need to do next.

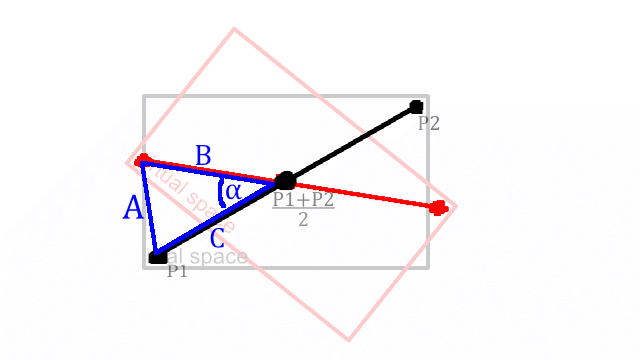

Now I don’t remember much from high school maths, but what I do remember is TRIANGLES. They sure are some shape. You can do THINGS with triangles! This looks like a triangle problem to me.

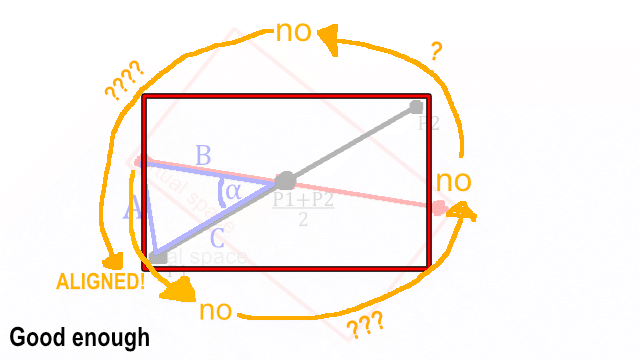

Luckily I know how to use a search engine and found out that there is indeed a formula for finding an angle in a triangle provided you know the triangle’s side lengths. In our case that’s the distances between the room’s midpoint, the point we marked with the controller, and the corresponding point we want to align to.

The angle marked α is the rotational difference between the real and virtual spaces. If angle α is added to the player’s rotation around the Z axis (yaw), it will rotate the real room through the game level so that it lines up with the virtual room. Here’s the formula for finding α:

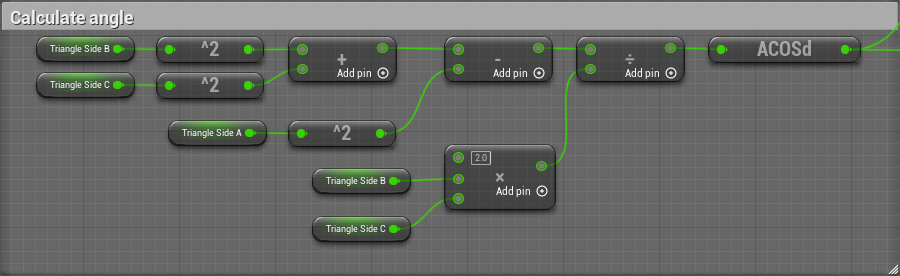

cos(α) = (B² + C² - A²) / (2BC), where A is the side opposite α, and B and C are the two sides that meet at α.
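For anyone who would rather read the formula as code, here is a minimal Python sketch of it. The function name is hypothetical and the points are simplified to 2D tuples. It assumes α sits at the room’s midpoint, so B and C are the distances from the midpoint to the marked point and to the target point, and A is the distance between those two points. It also assumes neither point sits exactly on the midpoint, since that would divide by zero.

```python
import math


def rotation_difference(mid, marked, target):
    """Angle at `mid` (in degrees) between the marked point and the target point."""
    b = math.dist(mid, marked)     # side B: midpoint -> point marked with the controller
    c = math.dist(mid, target)     # side C: midpoint -> point we want to align to
    a = math.dist(marked, target)  # side A: opposite the angle we are solving for

    cos_alpha = (b * b + c * c - a * a) / (2 * b * c)
    # Floating-point error can push the value slightly outside [-1, 1],
    # which would make acos raise, so clamp it first.
    cos_alpha = max(-1.0, min(1.0, cos_alpha))
    return math.degrees(math.acos(cos_alpha))


# Made-up example: the marked point is a quarter turn away from the target.
print(round(rotation_difference((0, 0), (1, 0), (0, 1)), 6))  # 90.0
```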

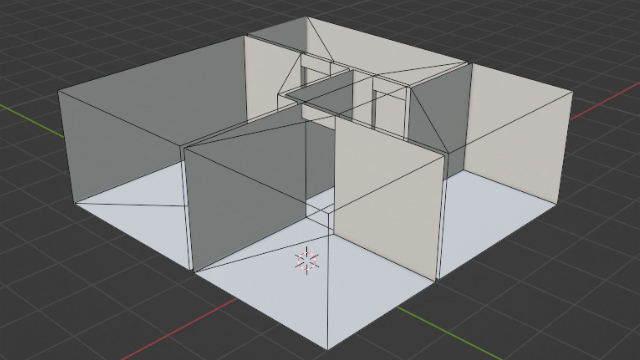

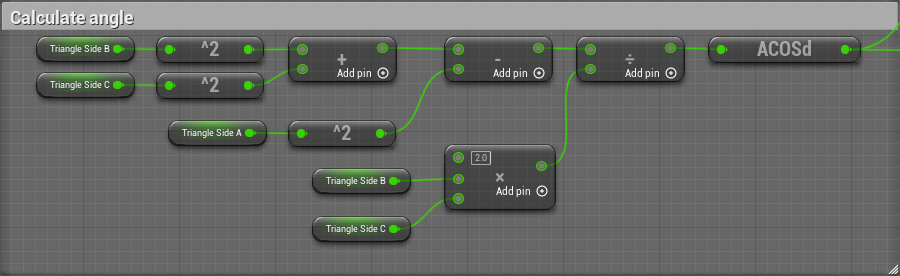

In Unreal Engine Blueprints, that looks like this:

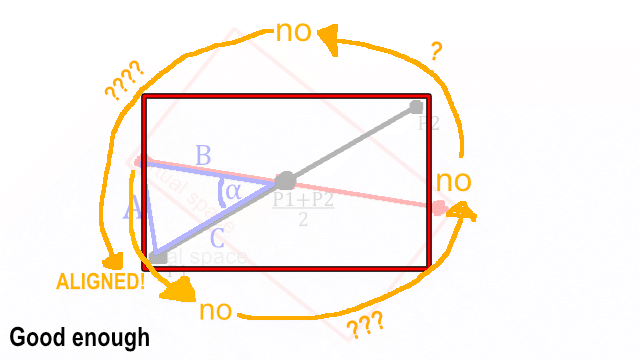

This works great in theory. In reality, of course, it doesn’t actually work at all. I could spend time figuring out why it’s going wrong; however, it turns out that if you beat the shit out of the function it eventually returns the correct angle. After that, running it again only returns zero, because once the room is aligned with reality there is no rotational difference. So I can just run it in a loop until it returns zero. Hooray for magic workarounds.
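One possible culprit, and this is a guess rather than something checked against the Blueprint above: the triangle formula goes through an inverse cosine, which only ever returns an angle between 0° and 180°. It tells you how far to rotate but not which way. A hedged alternative sketch in Python uses `atan2` on the cross and dot products of the two directions to get a signed angle. The function name is hypothetical, the points are 2D tuples, and the sign convention here is the usual counter-clockwise-positive one, which may need flipping for Unreal’s left-handed coordinates.

```python
import math


def signed_rotation_difference(mid, marked, target):
    """Signed angle in degrees to rotate the marked point about `mid` onto the target direction."""
    # Directions from the midpoint to each point.
    mx, my = marked[0] - mid[0], marked[1] - mid[1]
    tx, ty = target[0] - mid[0], target[1] - mid[1]

    cross = mx * ty - my * tx  # proportional to sin(angle); carries the sign
    dot = mx * tx + my * ty    # proportional to cos(angle)
    return math.degrees(math.atan2(cross, dot))


# Same size of error, opposite directions: the sign tells them apart.
print(signed_rotation_difference((0, 0), (1, 0), (0, 1)))   # 90.0
print(signed_rotation_difference((0, 0), (1, 0), (0, -1)))  # -90.0
```

If the sign really is what’s missing, a single pass with a signed angle should line things up without the loop, though that is untested here.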